The Complete Guide to Data Orchestration Tools for Modern Businesses

In today’s hyper-connected world, businesses collect enormous amounts of both qualitative and quantitative data across countless touchpoints. Yet without synchronization, this information remains fragmented, stripping customer interactions of context, relevance, and precision.

And this is where data orchestration tools step in!

They unify and automate data flows across systems, preserving contextual awareness throughout the customer journey. By merging every data signal into a unified profile graph, they create a continuously evolving data fabric that fuels real-time intelligence and smarter decisions.

Data, when orchestrated, becomes more than information — it becomes intelligence.

Positioned within the Data Layer & Customer Profile pillar, data orchestration forms the backbone of advanced frameworks like Agentic AI Orchestration, which power platforms such as Zigment. Unlike traditional integration or ETL solutions, modern orchestration tools act as an intelligence layer: they coordinate decisions, streamline workflows, and enable adaptive, personalized customer experiences.

After all, data orchestration tools solve what spreadsheets, manual workflows, and hopeful thinking never could, bringing coherence, automation, and intelligence to the heart of modern business operations.

What is Data Orchestration?

Data orchestration is the automated process of collecting, organizing, and coordinating data from multiple systems into a unified, usable flow. It ensures that the right data reaches the right place at the right time, enabling seamless analytics, smarter automation, and real-time decision-making across business operations and customer touchpoints.

A data orchestration platform doesn't just schedule jobs.

It understands relationships between data sources, manages complex dependencies, monitors data quality, and adapts workflows based on changing conditions, all without manual intervention!

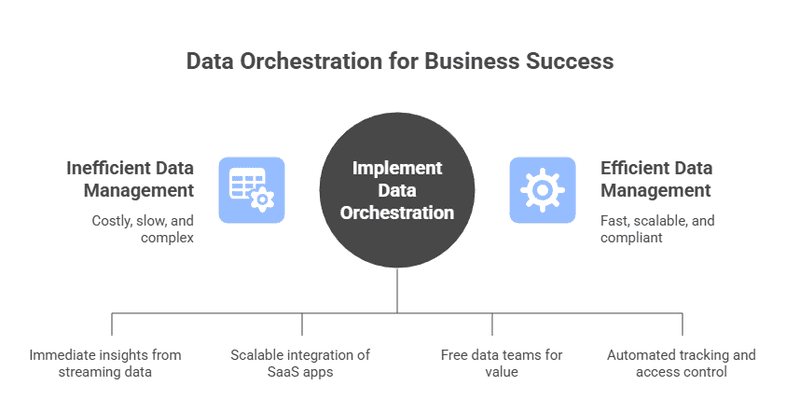

Why Businesses Need Data Orchestration Tools Today

You need workflow orchestration for data engineering because your current setup is costing you money, time, and competitive advantage. Here's how:

Real-time decision-making isn't optional anymore.

Your competitors are personalizing experiences in milliseconds while you're waiting for overnight batch jobs to complete. Real-time data orchestration platforms process information as it arrives (streaming customer behavior, transaction data, inventory levels) and make that intelligence immediately actionable.

Complexity has exploded.

By some industry estimates, the average enterprise uses 110+ SaaS applications. Each generates data. Each needs to talk to others. Managing these connections manually? That doesn't scale. Cloud-native data orchestration tools handle this complexity with pre-built connectors and API integrations that work out of the box.

Data teams are drowning.

According to recent surveys, data engineers spend 40% of their time on operational maintenance: monitoring jobs, fixing broken pipelines, and hunting down data quality issues. Enterprise data orchestration solutions automate these operational burdens, freeing your team to build value instead of fighting fires.

Governance and compliance aren't negotiable.

GDPR. CCPA. SOC 2. Your orchestration platform's governance and compliance features need to track lineage, enforce access controls, and maintain audit trails automatically. Manual processes introduce risk; orchestration greatly reduces it.

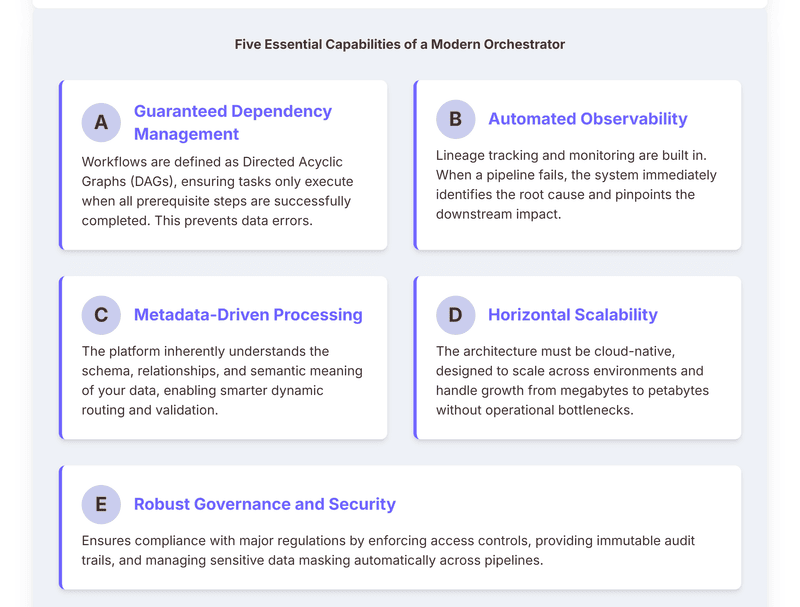

5 Core Features of a Data Orchestration Tool

Not all platforms are created equal. Here's what separates modern orchestration from glorified schedulers:

Workflow Orchestration for Data Pipelines

Define complex, multi-step workflows using directed acyclic graphs (DAGs). Dependencies are explicit: Task C never runs until both Task A and Task B complete successfully. This prevents downstream corruption and makes debugging much easier.
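To make the Task A/B/C rule concrete, here is a minimal sketch that uses Python's standard-library `graphlib` rather than any particular orchestrator. The `run_dag` helper and the task functions are hypothetical; real tools such as Airflow or Dagster add scheduling, retries, and persistence on top of this core idea.

```python
from graphlib import TopologicalSorter  # stdlib, Python 3.9+
from typing import Callable


def run_dag(tasks: dict[str, Callable[[], None]],
            deps: dict[str, set[str]]) -> dict[str, str]:
    """Run tasks in dependency order, skipping any task whose upstream did not succeed."""
    status: dict[str, str] = {}
    # static_order() yields every node after all of its predecessors
    # and raises graphlib.CycleError if the graph is not acyclic.
    for name in TopologicalSorter(deps).static_order():
        if any(status.get(up) != "success" for up in deps.get(name, set())):
            status[name] = "skipped"  # never run on missing or partial inputs
            continue
        try:
            tasks[name]()
            status[name] = "success"
        except Exception:
            status[name] = "failed"
    return status


def extract_orders() -> None:
    pass  # hypothetical Task A


def extract_customers() -> None:
    raise RuntimeError("CRM API unavailable")  # hypothetical failing Task B


def build_report() -> None:
    pass  # hypothetical Task C, depends on A and B


statuses = run_dag(
    {"A": extract_orders, "B": extract_customers, "C": build_report},
    {"C": {"A", "B"}},
)
print(statuses)  # C is "skipped" because B failed
```

Because C is marked skipped rather than run against incomplete inputs, the failure stays contained at B, which is exactly where debugging should start.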

Metadata-Driven Orchestration

The system understands your data, not just your jobs. Metadata-driven data orchestration tracks schemas, relationships, and business context, enabling smart decisions about processing order, data quality checks, and impact analysis when things change.
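As a rough illustration of metadata-driven decisions, the sketch below keeps a tiny in-memory catalog entry per dataset (its registered schema and the jobs that consume it) and compares it with the schema of a new batch. The `DatasetMetadata` structure, the `schema_diff` helper, and all dataset and job names are hypothetical; production platforms keep this information in a metadata catalog.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetMetadata:
    """Hypothetical catalog entry: registered schema plus the jobs that read this dataset."""
    schema: dict[str, str]
    consumers: list[str] = field(default_factory=list)


def schema_diff(expected: dict[str, str], observed: dict[str, str]) -> dict[str, list[str]]:
    """Compare a registered schema with the one observed on a new batch."""
    return {
        "added": sorted(observed.keys() - expected.keys()),
        "removed": sorted(expected.keys() - observed.keys()),
        "retyped": sorted(c for c in expected.keys() & observed.keys()
                          if expected[c] != observed[c]),
    }


catalog = {
    "orders": DatasetMetadata(
        schema={"order_id": "int", "amount": "decimal", "created_at": "timestamp"},
        consumers=["revenue_dashboard", "churn_model"],
    )
}

incoming = {"order_id": "int", "amount": "string",
            "created_at": "timestamp", "channel": "string"}
diff = schema_diff(catalog["orders"].schema, incoming)
if diff["removed"] or diff["retyped"]:
    # Breaking change: hold the affected consumers instead of running them blindly.
    print("hold:", catalog["orders"].consumers, diff)
```

Added columns are treated as non-breaking here, while removed or retyped columns pause downstream work; that policy is an assumption and would normally be configurable.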


Orchestration Automation and AI-Powered Intelligence

Modern platforms increasingly apply AI to predict failures before they happen, optimize resource allocation dynamically, and even suggest workflow improvements based on historical patterns. It's proactive rather than reactive.
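Vendors' predictive models are proprietary, so the sketch below deliberately swaps in a much simpler statistical heuristic, not machine learning: it flags a task run whose duration sits far outside its own history (a z-score check). The `is_anomalous` function, the 3-sigma threshold, and the sample durations are all assumptions for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag a run whose duration is more than `threshold` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough history to judge
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu  # any deviation from a perfectly stable history stands out
    return abs(latest - mu) / sigma > threshold


# Hypothetical durations (minutes) of the last ten nightly runs of one task.
past_runs = [12.1, 11.8, 12.4, 12.0, 11.9, 12.3, 12.2, 11.7, 12.0, 12.1]
print(is_anomalous(past_runs, 12.5))  # within normal variation
print(is_anomalous(past_runs, 19.0))  # likely a degraded source or skewed input
```

A slow-but-successful run is often an early warning of a later failure, which is why even this crude signal is worth alerting on before a hard error occurs.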

Scalable Data Orchestration Architecture

Whether you're processing gigabytes or petabytes, the platform should scale horizontally, adding workers rather than requiring ever-bigger machines. Hybrid cloud data orchestration services let you use on-premises systems alongside cloud resources, optimizing for cost and performance simultaneously.
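Horizontal scaling usually rests on a partition-and-fan-out pattern: split the input into independent chunks, process them in parallel, then combine the results. The single-machine sketch below uses a thread pool as a stand-in for a cluster of workers; `process_partition` and `run_partitioned` are hypothetical names, and a real platform would distribute partitions across nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def process_partition(rows: list[int]) -> int:
    """Hypothetical per-partition transform: here, just sum the values."""
    return sum(rows)


def partition(data: list[int], size: int) -> list[list[int]]:
    """Split the input into fixed-size chunks that can be processed independently."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def run_partitioned(data: list[int], size: int, workers: int) -> int:
    # Partitions share no state, so adding workers (or, in a real
    # deployment, machines) raises throughput without changing the logic.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(process_partition, partition(data, size)))
    return sum(partials)  # combine step


print(run_partitioned(list(range(1_000)), size=100, workers=4))  # 499500
```

The key design constraint is that each partition can be processed without knowledge of the others; once that holds, scaling out is mostly a scheduling problem.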

Observability and Lineage Tracking

When something breaks (and eventually, something will), you need to know exactly what happened, where, and why. Data lineage shows upstream and downstream impacts. Detailed logs pinpoint root causes. Alerting is intelligent, not noisy.
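Downstream impact analysis boils down to a graph walk over lineage metadata. The sketch below assumes a hypothetical lineage map from each asset to the assets built directly from it and returns everything affected when one source breaks; the asset names are illustrative only.

```python
from collections import deque

# Hypothetical lineage graph: each asset maps to the assets built directly from it.
lineage = {
    "crm.contacts": ["stg_contacts"],
    "stg_contacts": ["dim_customer"],
    "shop.orders": ["stg_orders"],
    "stg_orders": ["fct_orders"],
    "dim_customer": ["fct_orders", "churn_features"],
    "fct_orders": ["revenue_dashboard"],
    "churn_features": ["churn_model"],
}


def downstream_of(asset: str, graph: dict[str, list[str]]) -> set[str]:
    """Breadth-first walk returning every asset affected if `asset` breaks."""
    affected: set[str] = set()
    queue = deque([asset])
    while queue:
        for child in graph.get(queue.popleft(), []):
            if child not in affected:  # visit each asset once, even with shared children
                affected.add(child)
                queue.append(child)
    return affected


print(sorted(downstream_of("crm.contacts", lineage)))
```

Reversing the edges and running the same walk answers the opposite question (which upstream sources could explain a bad number), which is how lineage helps pinpoint root causes.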

The Data Orchestration Tools Market: Key Players and Approaches

The landscape is crowded but falls into distinct categories:

Open Source Powerhouses

Apache Airflow dominates here with massive community support and great flexibility. It's code-first, Python-native, and highly customizable. The catch? If you self-host, you're managing infrastructure, upgrades, and scaling yourself. Other open source data orchestration frameworks like Prefect and Dagster offer more modern APIs and a better developer experience, but self-hosting them carries similar operational overhead.

Cloud-Native Solutions

AWS Step Functions, Azure Data Factory, and Google Cloud Composer (a managed Apache Airflow service) excel when you're all-in on a single cloud provider. Deep integration with native services. Managed infrastructure. The tradeoff is vendor lock-in and sometimes limited flexibility for complex workflows.

Enterprise Platforms

Tools like Informatica, Talend, and IBM DataStage target large organizations needing extensive governance, support contracts, and integration with legacy systems. Powerful but expensive. Implementation often takes months, not weeks.

Modern, Asset-First Platforms

Newer entrants like Dagster focus on data assets rather than just tasks. This asset-first orchestration approach treats datasets as first-class citizens, making data quality and lineage central to workflow design rather than afterthoughts.

Specialized Solutions

Some platforms target specific use cases. Marketing data orchestration tools focus on customer journey orchestration and campaign workflows. Others optimize for specific industries or data types.

The reality?

Most enterprises use multiple tools. Airflow for data engineering pipelines. A cloud-native option for simple workflows. Maybe a specialized platform for customer engagement.

Or you could consolidate around intelligence that actually understands your customers.

Choosing the Right Orchestration for Your Needs

Here's the uncomfortable truth: the "best" tool depends entirely on context. Let's make this practical.

Start with your team's skillset. If your data engineers live in Python, Airflow or Prefect make sense. If they prefer low-code interfaces, look at cloud-native options or enterprise platforms with visual designers.

Consider operational capacity. Be honest: do you have bandwidth to maintain infrastructure? Open source data orchestration tools offer maximum control but require ongoing operational investment. Managed services cost more upfront but save engineering time.

Evaluate your data architecture. Already deep in AWS? Step Functions might suffice for simpler needs. Running a hybrid infrastructure? You need hybrid cloud data orchestration services that span environments seamlessly.

Think about scale trajectory. That workflow handling 100 GB today might need to process 10 TB next year. Choose a scalable data orchestration architecture that grows with you, not against you.

Factor in compliance requirements. If you're in healthcare, finance, or handling EU customer data, your orchestration layer must include robust governance, audit trails, and access controls. Not all platforms handle this equally.

Best Practices for Using Data Orchestration Tools

Having the tool doesn't mean you're using it well. Here's what separates mature orchestration from chaos:

Design idempotent workflows. Every task should produce the same result if run multiple times. This makes retries safe and debugging predictable. A repeated run should cause no duplicate side effects and no unexpected state changes.
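A common way to get idempotency is to write with an upsert keyed on a natural identifier instead of appending. In the minimal sketch below a Python dict stands in for a warehouse table (an assumption; in SQL this is typically a `MERGE` or `INSERT ... ON CONFLICT`), and `load_orders` is a hypothetical task.

```python
def load_orders(target: dict[int, dict], batch: list[dict]) -> None:
    """Idempotent load: upsert keyed on order_id instead of blindly appending."""
    for row in batch:
        target[row["order_id"]] = row  # re-running overwrites with identical data


warehouse: dict[int, dict] = {}
batch = [{"order_id": 1, "amount": 40}, {"order_id": 2, "amount": 15}]

load_orders(warehouse, batch)
load_orders(warehouse, batch)  # e.g. an automatic retry after a timeout
print(len(warehouse))  # 2, not 4: the retry did not duplicate rows
```

An append-based version of the same task would double-count revenue on every retry, which is exactly the kind of silent corruption idempotency prevents.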

Embrace incremental processing. Don't reprocess everything every time. Incremental loading processes only what has changed since the last successful run, typically tracked with a watermark such as a last-updated timestamp, dramatically improving efficiency and reducing costs.
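One common technique for incremental loading is a watermark: store the highest `updated_at` value seen by the last successful run and extract only rows newer than it. The sketch below assumes a hypothetical `updated_at` column and an in-memory source; where the watermark is persisted between runs is left out.

```python
from datetime import datetime


def incremental_extract(rows: list[dict], watermark: datetime) -> tuple[list[dict], datetime]:
    """Return only rows updated after the watermark, plus the advanced watermark."""
    new_rows = [r for r in rows if r["updated_at"] > watermark]
    new_watermark = max((r["updated_at"] for r in new_rows), default=watermark)
    return new_rows, new_watermark


source = [
    {"id": 1, "updated_at": datetime(2024, 5, 1, 9, 0)},
    {"id": 2, "updated_at": datetime(2024, 5, 2, 9, 0)},
    {"id": 3, "updated_at": datetime(2024, 5, 3, 9, 0)},
]

# The previous run stored this watermark after it succeeded (hypothetical value).
last_watermark = datetime(2024, 5, 1, 12, 0)
changed, last_watermark = incremental_extract(source, last_watermark)
print([r["id"] for r in changed])  # [2, 3]
```

Only advance the stored watermark after the load commits; otherwise a failed run silently skips data. Late-arriving rows with older timestamps can also be missed, so many pipelines re-read a small overlap window and rely on idempotent writes to absorb the duplicates.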

Version control your workflows. Treat orchestration definitions like code because that's what they are. Git integration. Code review. Testing in lower environments before production deployment.

Build observability from day one. When (not if) something fails at 2 AM, you need to know immediately what broke, why, and what business processes are affected. Data lineage and dependency graphs become your troubleshooting superpower.

Implement circuit breakers. If a source system is down, don't hammer it with retries every minute. Pipelines should back off, fail gracefully, and alert humans when intervention is needed.
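The circuit-breaker pattern trips after a number of consecutive failures, skips further calls for a cooldown period, then lets a single trial call through. The generic sketch below is not any specific orchestrator's feature; `CircuitBreaker`, `CircuitOpenError`, and `flaky_source` are hypothetical names, and the clock is injectable so the behaviour can be exercised without sleeping.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised instead of calling a source that is known to be down."""


class CircuitBreaker:
    """Stop calling a failing source after `max_failures` errors; allow a trial call after `cooldown` seconds."""

    def __init__(self, max_failures: int = 3, cooldown: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.cooldown = cooldown
        self.clock = clock  # injectable for testing
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], object]) -> object:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown:
                # Fail fast instead of hammering the source; an alert would fire here.
                raise CircuitOpenError("circuit open: source call skipped")
            self.opened_at = None  # cooldown elapsed: let one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        self.failures = 0  # a success closes the circuit again
        return result


now = [0.0]  # fake clock for the demo
breaker = CircuitBreaker(max_failures=2, cooldown=60.0, clock=lambda: now[0])


def flaky_source() -> object:
    raise ConnectionError("source down")  # hypothetical unavailable API


outcomes = []
for _ in range(3):
    try:
        breaker.call(flaky_source)
    except Exception as exc:
        outcomes.append(type(exc).__name__)
print(outcomes)  # ['ConnectionError', 'ConnectionError', 'CircuitOpenError']
```

Because the failure count is not reset when the cooldown ends, a failed trial call re-trips the breaker immediately, while a successful one closes it.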

Test data quality at boundaries. Validate data as it enters your system, not after transformation. Catch schema changes, null values, and data anomalies before they corrupt downstream processes.
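Boundary validation can start as a simple contract check on every incoming row, routing failures to a reject list before any transformation runs. The column contract, the business rule (no negative amounts), and the function names below are hypothetical; dedicated data quality frameworks offer far richer checks.

```python
from dataclasses import dataclass

REQUIRED = {"customer_id": int, "email": str, "amount": float}  # hypothetical contract


@dataclass
class ValidationResult:
    valid: list[dict]
    rejected: list[tuple[dict, str]]


def validate_at_boundary(rows: list[dict]) -> ValidationResult:
    """Check each incoming row against the expected contract before any transformation."""
    result = ValidationResult(valid=[], rejected=[])
    for row in rows:
        missing = REQUIRED.keys() - row.keys()
        if missing:  # schema change or truncated record
            result.rejected.append((row, f"missing columns: {sorted(missing)}"))
            continue
        bad = [col for col, typ in REQUIRED.items()
               if row[col] is None or not isinstance(row[col], typ)]
        if bad:  # nulls or unexpected types
            result.rejected.append((row, f"null or wrong type: {bad}"))
        elif row["amount"] < 0:  # simple business-rule anomaly
            result.rejected.append((row, "negative amount"))
        else:
            result.valid.append(row)
    return result


batch_rows = [
    {"customer_id": 1, "email": "a@example.com", "amount": 19.99},
    {"customer_id": 2, "email": None, "amount": 5.0},
    {"customer_id": 3, "email": "c@example.com"},
    {"customer_id": 4, "email": "d@example.com", "amount": -3.0},
]
checked = validate_at_boundary(batch_rows)
print(len(checked.valid), [reason for _, reason in checked.rejected])
```

Keeping the rejected rows together with a reason, rather than dropping them, gives the team something concrete to inspect when an upstream system changes.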

How Zigment Redefines Data Orchestration for Customer Engagement

Zigment transforms fragmented interactions into continuous understanding, allowing businesses to engage with customers not as data points, but as dynamic conversations in progress.

In a world where attention spans are short and expectations are instant, Zigment ensures your business responds not just quickly but intelligently, because real engagement doesn’t happen on a schedule; it happens in the moment.

Its agentic orchestration framework empowers AI agents to make autonomous decisions. When a customer reaches out, Zigment dynamically retrieves context, determines optimal responses, and coordinates across channels without relying on predefined workflows or batch jobs.

Zigment interprets intent, sentiment, and behavioural history in real time, turning static records into living intelligence.