Data Orchestration: Definition, Framework Benefits, Trends And Innovations

“Data is the new oil.” But what good is oil if it’s stuck in the ground?

Without coordination, your data sits idle in silos, messy and underused.

And that’s where data orchestration steps in!

It’s the silent conductor ensuring every data process (collection, transformation, and delivery) plays its part in perfect time.

Let's break it down simply. No jargon. No theory dumps. Just a clear understanding of what data orchestration really is, why it matters, and how you can start using it today, especially when it comes to customer data management.

What Is Data Orchestration?

Data orchestration is the process of managing, scheduling, and coordinating data across multiple systems to ensure it flows seamlessly between sources, transformations, and destinations.

Think of it like a conductor leading an orchestra: every tool, process, and dataset plays in harmony. Without orchestration, teams end up manually moving data, reconciling formats, and firefighting broken pipelines.

In practice, orchestration tools:

Automate repetitive tasks like ingestion, transformation, and delivery.

Manage dependencies so workflows execute in the correct order.

Monitor pipeline health to ensure reliable and timely data movement.

Improve collaboration between data engineers, analysts, and business users.

The result? Faster insights, cleaner data, and less time fixing broken workflows.

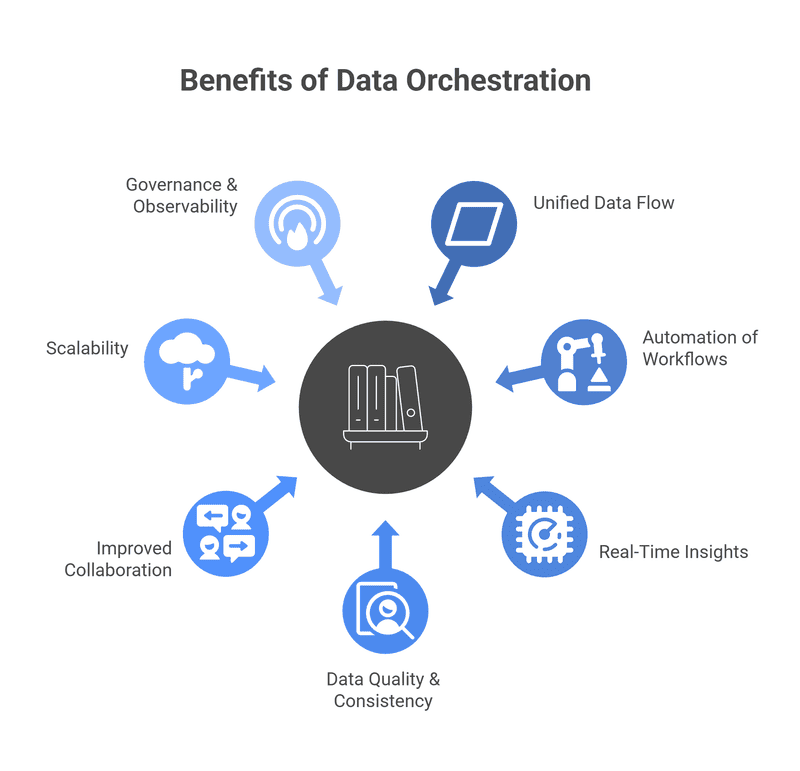

Key Benefits Of Data Orchestration

Unified Data Flow

Data orchestration connects all your tools, sources, and storage systems, ensuring information flows seamlessly across the organization.

Automation of Workflows

It eliminates repetitive manual data handling by automating extraction, transformation, and loading (ETL) processes.

Real-Time Insights

With synchronized pipelines, teams get access to up-to-date data, accelerating analytics and decision-making.

Better Data Quality & Consistency

Orchestration enforces standardized processes and error handling, reducing inconsistencies and data silos.

Improved Collaboration

Teams across data engineering, analytics, and business functions work from a single source of truth, breaking down communication barriers.

Scalability

Orchestration frameworks are built to scale with growing data volume, including in complex multi-cloud or hybrid environments.

Governance & Observability

Built-in monitoring, logging, and governance features improve transparency and compliance across data workflows.

The Core Components of Data Orchestration

Every orchestration platform, whether Apache Airflow, Prefect, or Dagster, manages a few essential responsibilities:

Scheduling: Decide when data jobs run (hourly, daily, event-based).

Dependency Management: Ensure jobs run in the right order.

Monitoring & Alerts: Track pipeline health and flag failures.

Scaling & Execution: Handle workloads across distributed systems.

Integrations: Connect seamlessly with tools like Snowflake, dbt, or Kafka.

Together, these functions create a resilient, automated data pipeline framework that keeps your data ecosystem in sync.
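To make dependency management concrete, here is a minimal sketch using only Python's standard library. It is not how Airflow, Prefect, or Dagster work internally; the task names and the `run_pipeline` helper are hypothetical and exist only for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "transform_join": {"ingest_orders", "ingest_customers"},
    "deliver_warehouse": {"transform_join"},
}


def run_pipeline(deps, tasks):
    """Run each task only after all of its dependencies have run."""
    executed = []
    for name in TopologicalSorter(deps).static_order():
        tasks[name]()
        executed.append(name)
    return executed


# Placeholder task bodies; real tasks would call your ingestion or SQL code.
tasks = {name: (lambda n=name: print(f"running {n}")) for name in dependencies}
order = run_pipeline(dependencies, tasks)
```

The two ingest tasks can run in either order, but the join always waits for both, and delivery always runs last. A real orchestrator layers scheduling, retries, and alerting on top of this same ordering idea.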

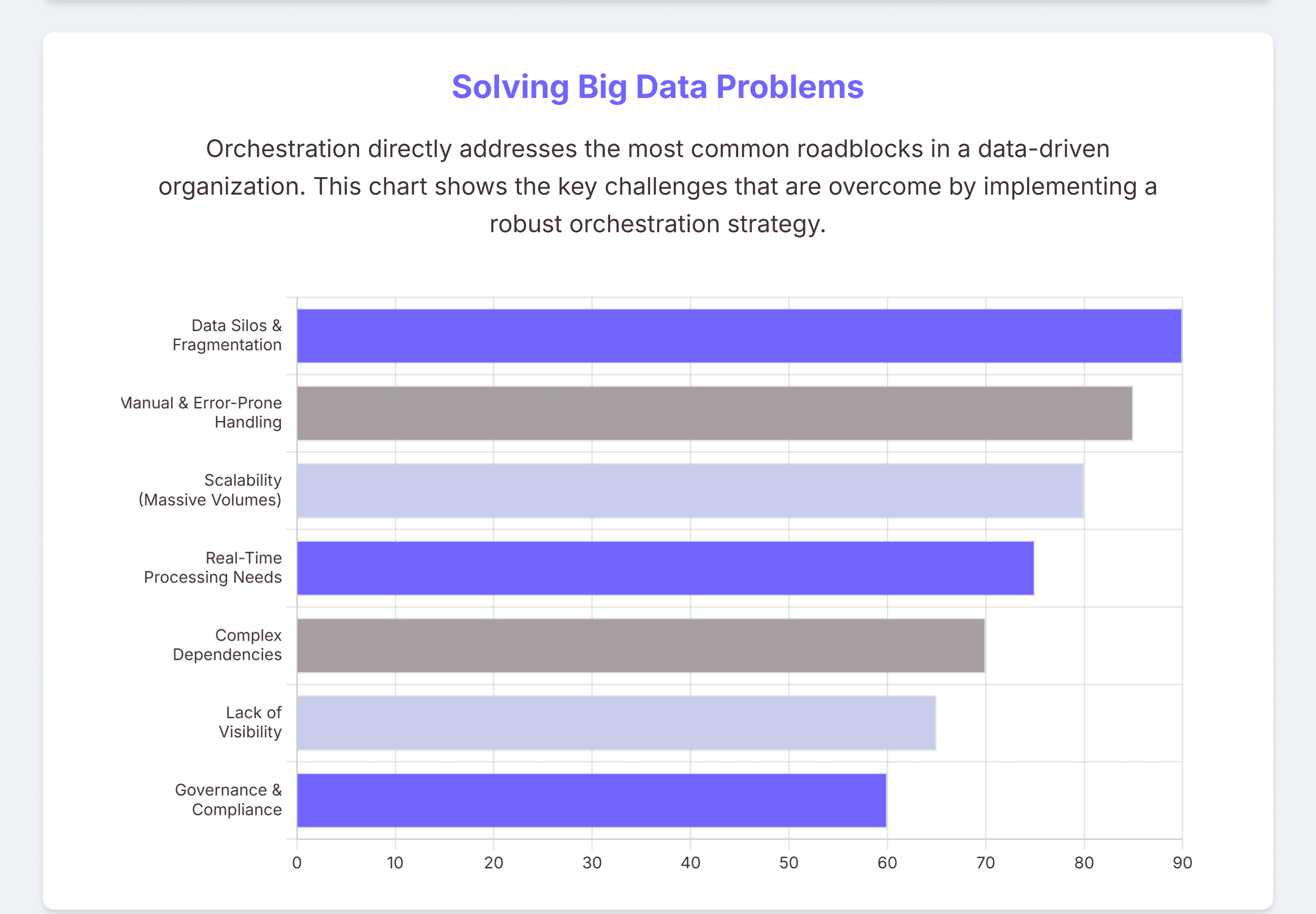

Big Data Challenges Overcome by Data Orchestration

In the era of instant insight, orchestration keeps data in motion.

Data Silos and Fragmentation

In large organizations, data lives across multiple systems and tools. Data orchestration breaks down silos by connecting diverse data sources into unified, accessible workflows.

Manual and Error-Prone Data Handling

Manual ETL jobs or scripts are time-consuming and risky. Orchestration automates ingestion, transformation, and delivery, reducing human error and operational overhead.

Scalability Across Massive Data Volumes

Big data workloads often exceed the capacity of traditional ETL systems. Orchestration platforms dynamically scale pipelines to handle increasing volume and velocity.

Real-Time Data Processing Needs

Businesses now need live insights, not next-day reports. Data orchestration enables real-time and event-driven processing, ensuring data is always up to date.

Complex Dependencies and Workflow Management

Big data pipelines involve multiple dependent tasks and systems. Orchestration manages dependencies, ensuring jobs execute in the right order without bottlenecks.

Lack of Visibility and Observability

Monitoring large-scale data operations can be opaque and reactive. With orchestration, teams gain real-time visibility, alerts, and lineage tracking for proactive issue resolution.

Governance and Compliance Challenges

Big data environments often struggle with access control and auditability. Orchestration enforces governance policies and tracks lineage for compliance with regulations like GDPR or HIPAA.

The 3 Steps of Data Orchestration

A simple orchestration process follows three stages:

Ingest – Collect data from various sources.

Transform – Clean, normalize, and enrich it.

Deliver – Send it to analytics tools or warehouses.

Each stage relies on automation and metadata tracking to ensure consistency, quality, and speed.
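The three stages can be sketched in plain Python. This is an illustrative toy, not a production pattern: the sample records, the `warehouse` list standing in for a destination, and the function names are all assumptions.

```python
from datetime import datetime, timezone


def ingest():
    # Stand-in for reading from an API, file, or database (made-up records).
    return [
        {"email": " Ada@Example.com ", "plan": "pro"},
        {"email": "grace@example.com", "plan": None},
    ]


def transform(records):
    # Clean and normalize: trim and lowercase emails, default missing plans.
    return [
        {"email": r["email"].strip().lower(), "plan": r["plan"] or "free"}
        for r in records
    ]


def deliver(records, destination):
    # Stand-in for loading into a warehouse or analytics tool.
    destination.extend(records)
    return len(records)


def run(destination):
    started = datetime.now(timezone.utc)
    raw = ingest()
    clean = transform(raw)
    delivered = deliver(clean, destination)
    # Run metadata kept alongside the data for traceability.
    return {
        "started_at": started.isoformat(),
        "rows_in": len(raw),
        "rows_out": delivered,
    }


warehouse = []
metadata = run(warehouse)
```

Comparing `rows_in` with `rows_out` in the returned metadata is a simple way to notice when a stage silently drops records.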

The Framework Behind a Robust Data Orchestration Process

A robust orchestration framework includes:

Workflow Design (defining DAGs and dependencies)

Scheduling Logic (event or time-based triggers)

Observability Layer (monitoring performance and lineage)

Governance Controls (ensuring compliance and access management)

This combination forms the operational backbone of modern, data-driven enterprises.

Data Orchestration vs ETL

While both data orchestration and ETL (Extract, Transform, Load) automate data movement, they differ in scope.

ETL focuses on a single kind of pipeline: extracting data from sources, transforming it, and loading it into a destination.

Data orchestration manages the entire ecosystem — including ETL, analytics, and monitoring.

Simply put, ETL is one instrument in the orchestra; orchestration conducts the entire symphony.

Data Orchestration vs Data Automation

Data automation executes single, repetitive tasks (like updating a dashboard).

Data orchestration, however, coordinates multiple automated tasks into one unified, intelligent workflow.

Automation is efficiency; orchestration is strategy.

Data Orchestration vs Data Visualization

Visualization tells stories with data; orchestration ensures the story’s source is fresh and reliable. Without proper orchestration, dashboards display outdated or inconsistent insights.

How to Choose the Right Data Orchestration Tool

When evaluating orchestration platforms, consider:

Ease of Use – Visual or low-code interfaces simplify adoption.

Scalability – Handles growing data volumes efficiently.

Integration Capabilities – Works with your existing stack.

Observability – Real-time monitoring and alerting.

Security & Governance – Role-based access and encryption.

Cost Flexibility – Pay for usage, not licenses.

Challenges & Common Pitfalls in Implementing Data Orchestration

Common challenges include:

Over-automation without validation.

Poor documentation and lack of visibility.

Siloed ownership within engineering teams.

Limited observability or governance.

Misalignment with business goals.

Start small! Orchestrate one workflow, document everything, then scale confidently.
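One way to guard against over-automation without validation is to put a check between transformation and delivery. The sketch below is a minimal example of that idea; the `validate` helper and the required fields are hypothetical, and real pipelines usually need richer rules.

```python
def validate(records, required_fields):
    """Split records into valid and rejected, based on required fields."""
    valid, rejected = [], []
    for record in records:
        # A field counts as missing when it is absent, None, or an empty string.
        missing = [f for f in required_fields if record.get(f) in (None, "")]
        (rejected if missing else valid).append(record)
    return valid, rejected


records = [
    {"id": 1, "email": "ada@example.com"},
    {"id": 2, "email": ""},
]
valid, rejected = validate(records, required_fields=["id", "email"])
if rejected:
    print(f"{len(rejected)} record(s) held back for review")
```

Only the `valid` list moves on to delivery; the rejected records stay visible for someone to review instead of flowing downstream unnoticed.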

The Role of Observability & Governance in Data Orchestration

Observability provides transparency — you can trace data movement, detect latency, and fix failures.

Governance ensures compliance — defining who accesses, modifies, or distributes data.

Together, they build trust.
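The observability half can be sketched as a small wrapper that records the status and duration of every task run. This is an assumption-laden illustration: `observed`, `run_log`, and the two sample tasks are hypothetical, and a real setup would send these records to a logging or metrics backend. It does not cover governance.

```python
import functools
import time

run_log = []  # hypothetical in-memory store for run records


def observed(task):
    """Record status and duration for every call to a task."""
    @functools.wraps(task)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        status = "failed"
        try:
            result = task(*args, **kwargs)
            status = "success"
            return result
        finally:
            # Runs on success and on failure, so no run goes unrecorded.
            run_log.append({
                "task": task.__name__,
                "status": status,
                "seconds": time.perf_counter() - start,
            })
    return wrapper


@observed
def load_customers():
    return 42


@observed
def load_orders():
    raise RuntimeError("source unavailable")


load_customers()
try:
    load_orders()
except RuntimeError:
    pass  # the failure is still recorded in run_log
```

After both calls, `run_log` holds one entry per task, which is the raw material for tracing failures and spotting slow steps.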

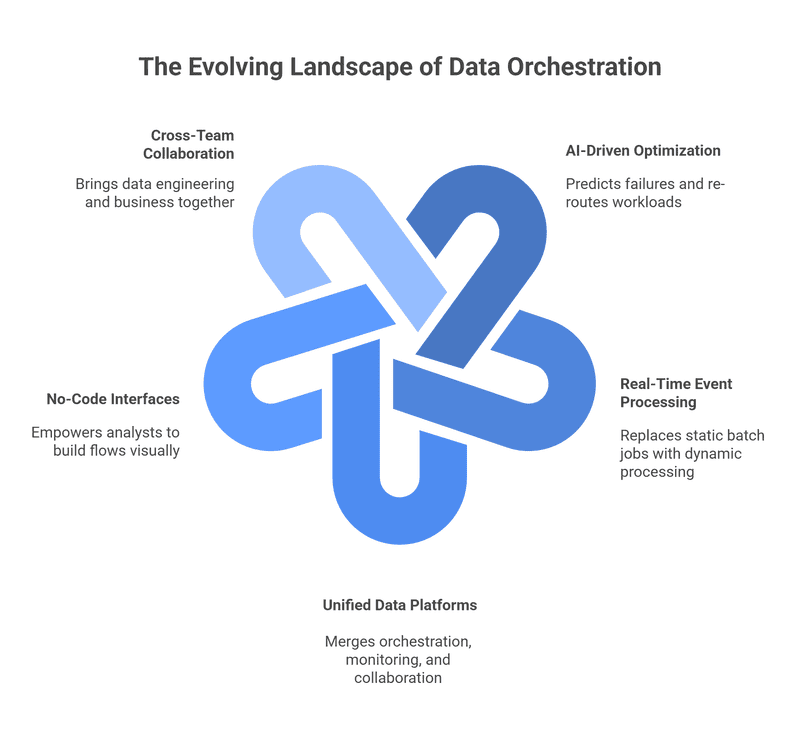

The Future of Data Orchestration: Trends & Innovations

Next-gen orchestration is moving from automation to intelligence:

AI-Driven Optimization predicts failures and re-routes workloads.

Real-Time Event Processing replaces static batch jobs.

Unified Data Platforms merge orchestration, monitoring, and collaboration.

No-Code Interfaces empower analysts to build flows visually.

Cross-Team Collaboration brings data engineering and business together.

How Organizations Are Making the Most of Their Data Using Zigment

With Zigment, data doesn’t wait — it works with you.

At the end of the day, orchestration is valuable only when it drives action.

That’s where Zigment bridges the gap, transforming traditional orchestration into intelligent collaboration.

By unifying structured and conversational data, Zigment helps organizations move from data management to data momentum, where every interaction learns, adapts, and responds in real time.

This is how modern organizations are making the most out of their data with Zigment.