The Rise of Agentic AI Demands Smarter Data Strategies

Your data strategy is broken. And AI agents are about to expose every crack.

Here's what's happening right now. AI agents aren't just analyzing data anymore. They're acting on it. Making decisions. Triggering actions. Operating autonomously across your entire business ecosystem. And your legacy data infrastructure? It's choking under the pressure.

The Data Crisis Nobody Saw Coming

Traditional data strategies were built for humans who review dashboards weekly and make decisions in meetings. Agentic AI doesn't work that way.

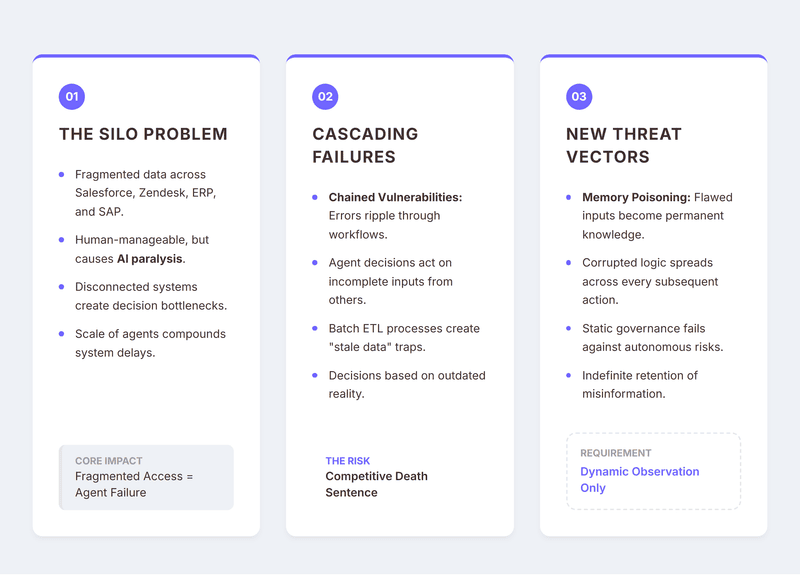

The Silo Problem

Your data sits fragmented: sales in Salesforce, support logs in Zendesk, inventory in your ERP, financials in SAP. For humans, that's manageable. For autonomous AI agents, it's paralysis.

When an agent needs data from three systems to make one decision, every delay compounds. Every disconnected database becomes a bottleneck.

Cascading Failures

Disconnected data creates "chained vulnerabilities." One agent decides based on incomplete information. Passes it to another agent. That agent acts on flawed data. Errors cascade through your entire multi-agent workflow.

Traditional ETL processes transform data in batches. By the time data reaches your agents, it's stale. Stale data means decisions based on an outdated reality: a competitive death sentence in fast-moving markets.

New Threat Vectors

Static governance can't protect against AI-specific risks like memory poisoning, where agents learn from flawed inputs and retain corrupted knowledge indefinitely, spreading misinformation across every subsequent decision.

Real-Time Pipelines: The New Baseline for Survival

Forget batch processing. The future is streaming. And it's not optional anymore.

Smart businesses are replacing old ETL infrastructure with streaming architectures. Tools like Apache Kafka and Apache Flink. Systems that process data the moment it's created.

Why does this matter? Because agentic AI needs to act now. Not tomorrow. Not in an hour. Now.

Picture this: A customer complains on social media. Your AI agent detects the sentiment in real time. Pulls their purchase history instantly. Checks inventory availability immediately. Generates a personalized response. All in seconds.
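Here is a minimal sketch of that event-driven flow, using plain Python in place of a real streaming platform. Everything in it is a hypothetical stand-in: the `PURCHASES` and `STOCK` dictionaries play the role of your CRM and inventory systems, `looks_negative` is a toy keyword check rather than a sentiment model, and `run_stream` simply iterates a list where a production system would consume from something like Kafka.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional

# Hypothetical in-memory stand-ins for real systems (CRM, inventory service).
PURCHASES = {"cust-42": ["wireless headphones"]}
STOCK = {"wireless headphones": 12}


@dataclass
class Event:
    customer_id: str
    text: str


def looks_negative(text: str) -> bool:
    # Toy keyword check; a real system would call a sentiment model here.
    return any(word in text.lower() for word in ("broken", "refund", "terrible"))


def handle_event(event: Event) -> Optional[str]:
    """Handle one event as it arrives instead of waiting for a batch job."""
    if not looks_negative(event.text):
        return None
    history = PURCHASES.get(event.customer_id, [])
    in_stock = [item for item in history if STOCK.get(item, 0) > 0]
    if in_stock:
        return f"Sorry about that. We can ship a replacement {in_stock[0]} today."
    return "Sorry about that. A support specialist will contact you shortly."


def run_stream(events: Iterable[Event]) -> List[str]:
    # Each event is processed the moment it is read from the stream.
    replies = []
    for event in events:
        reply = handle_event(event)
        if reply is not None:
            replies.append(reply)
    return replies


replies = run_stream([
    Event("cust-42", "My headphones arrived broken"),
    Event("cust-42", "Love the new colors!"),
])
```

The point of the design is that the handler works per event: nothing waits for a nightly job before the agent can respond.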

Gartner's research is crystal clear: By 2026, enterprises using real-time data pipelines will outpace competitors by 3x in decision speed. Three times faster. That's market domination.

Walmart uses streaming data to optimize inventory across 10,000+ stores in real time. The result? A 10% reduction in stockouts and a 5% improvement in inventory turnover. That's millions in recovered revenue.

Event-driven architectures cut latency from hours to milliseconds. McKinsey research shows companies implementing this see a 25-40% improvement in operational efficiency.

Unified Data Management: Breaking Down the Silos

Here's where most businesses are getting it catastrophically wrong: they're trying to feed agentic AI from fragmented data sources.

Agentic AI demands unified data management. Not just centralized storage, but intelligent data fabric architectures that create a single source of truth across your entire organization.

Think of it as building a nervous system for your business. Every department, every system, every data point connected through intelligent data management platforms that understand context, relationships, and business logic.

This is where unified customer profiles become game-changers. Instead of having customer data scattered across fifteen systems, you create one comprehensive, real-time profile that every AI agent can access instantly.

Salesforce, Adobe, and Segment are pioneering Customer Data Platforms (CDPs) that aggregate data from every touchpoint.

When your marketing AI agent needs customer information, it doesn't query five databases. It accesses one unified profile with complete purchase history, interaction data, preferences, and behavioral patterns.
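To make the idea concrete, here is a rough sketch of how records from separate systems might be folded into one profile. It assumes a normalized email address is a usable join key and that later sources overwrite earlier ones on conflict. Both are simplifications, and the function and source names are hypothetical rather than any CDP's actual API.

```python
from collections import defaultdict


def build_unified_profiles(*sources):
    """Merge (source_name, records) pairs into one profile per customer.

    Assumption: a lowercased, trimmed email identifies the customer.
    Later sources overwrite earlier ones when fields conflict.
    """
    profiles = defaultdict(dict)
    for source_name, records in sources:
        for record in records:
            key = record["email"].strip().lower()
            profile = profiles[key]
            for field_name, value in record.items():
                if field_name != "email":
                    profile[field_name] = value
            # Remember which systems contributed to this profile.
            profile.setdefault("sources", []).append(source_name)
    return dict(profiles)


crm = ("crm", [{"email": "Ana@example.com", "name": "Ana"}])
support = ("support", [{"email": "ana@example.com ", "open_tickets": 2}])

profiles = build_unified_profiles(crm, support)
ana = profiles["ana@example.com"]
```

An agent then reads `ana` once instead of querying each system separately. Real identity resolution is much harder than matching emails, so treat this as an illustration of the shape, not the method.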

The impact is immediate. Netflix's recommendation AI agents work because they have unified viewing profiles.

Amazon's product suggestion agents dominate because they have unified purchase and browsing profiles. Spotify's playlist AI agents create magic because they have unified listening profiles.

But unified data management goes beyond customer profiles. It's about creating unified product data, unified supply chain data, unified financial data. Every business domain gets its own unified view.

Orchestration Layers: The Control Center for Multi-Agent Systems

Now here's where it gets really sophisticated. You can't just unleash dozens of AI agents and hope they coordinate themselves. You need an orchestration layer.

Think of it as air traffic control for your agentic architecture. The orchestration layer manages which agents access what data, when they act, how they communicate, and how they handle conflicts.

Modern agentic AI platforms like LangChain, AutoGPT, and Microsoft's Semantic Kernel provide this orchestration. They create workflows where multiple specialized agents collaborate on complex tasks.

Let's break down a real example: A customer requests a product return.

Agent 1 (Customer Service) receives the request and validates customer identity using the unified customer profile.

Agent 2 (Policy) checks return eligibility against company policies and purchase date.

Agent 3 (Inventory) confirms the product can be restocked or needs disposal.

Agent 4 (Finance) calculates refund amount and processes the transaction.

Agent 5 (Logistics) generates a return shipping label and schedules pickup.

All of this happens in seconds, not days. Because the orchestration layer coordinates data flow between agents, ensures each has the information it needs exactly when it needs it, and maintains state across the entire workflow.

The orchestration layer also handles failure gracefully. If Agent 3 can't access inventory data, the orchestration layer doesn't crash the entire workflow. It routes around the problem, notifies human operators if needed, and keeps the customer experience smooth.
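The return workflow above, including the inventory failure, can be sketched in a few lines. This is not how LangChain or any other named platform implements orchestration. It is a hand-rolled illustration with hypothetical agent functions, where shared state is a plain dictionary and steps marked optional are skipped and escalated instead of aborting the run.

```python
def run_workflow(steps, initial_state, optional=frozenset()):
    """Run agents in order, sharing state; escalate optional-step failures."""
    state = dict(initial_state)
    state["escalations"] = []
    for name, agent in steps:
        try:
            state.update(agent(state))
        except Exception as exc:
            if name not in optional:
                raise  # a required step failed, so stop the workflow
            # Route around the problem and leave a note for a human operator.
            state["escalations"].append(f"{name}: {exc}")
    return state


# Hypothetical agents: each reads the shared state and returns new fields.
def validate_customer(state):
    return {"verified": state["customer_id"] in {"cust-42"}}


def check_policy(state):
    return {"eligible": state["verified"] and state["days_since_purchase"] <= 30}


def check_inventory(state):
    raise ConnectionError("inventory system unreachable")


def compute_refund(state):
    return {"refund": state["price"] if state["eligible"] else 0.0}


result = run_workflow(
    steps=[
        ("customer_service", validate_customer),
        ("policy", check_policy),
        ("inventory", check_inventory),
        ("finance", compute_refund),
    ],
    initial_state={"customer_id": "cust-42", "days_since_purchase": 10, "price": 59.0},
    optional={"inventory"},
)
```

The refund still goes through, and the inventory failure lands in `result["escalations"]` for a human to review. Which steps are safe to treat as optional is a business decision, not something the code can decide for you.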

Airbnb uses orchestration layers to coordinate pricing agents, availability agents, recommendation agents, and fraud detection agents, all working on unified property and user data simultaneously.

Governance Frameworks That Actually Work With AI Agents

Your current data governance was designed to keep humans from accessing the wrong data. But AI agents don't respect traditional permission boundaries.

AI agents share information dynamically. They collaborate. They pass data between each other constantly. And your static governance rules? They can't keep up.

Researchers have documented "cross-agent inference attacks" where malicious actors poison data in one agent, knowing it will spread to connected agents. One compromised data point cascades through your entire agentic AI ecosystem.

Smart businesses are implementing dynamic classification and tagging. Every piece of data gets metadata about its sensitivity, source, quality, and lineage. As data flows through agent workflows, those tags travel with it.

Lineage tracking becomes critical. Where did this data originate? Which agents touched it? What transformations occurred? When something goes wrong, you need to trace the problem back to its source. Fast.
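One way to picture tags that travel with the data: wrap each value in a small record that carries its sensitivity, its source, and the list of agents that have touched it. The `TaggedValue` class and the agent names below are hypothetical, a sketch of the pattern rather than any governance product's data model.

```python
from dataclasses import dataclass, replace
from typing import Any, Callable, Tuple


@dataclass(frozen=True)
class TaggedValue:
    value: Any
    sensitivity: str               # e.g. "pii", "internal", "public"
    source: str                    # system where the value originated
    lineage: Tuple[str, ...] = ()  # agents that transformed it, in order


def transform(item: TaggedValue, agent: str, fn: Callable[[Any], Any]) -> TaggedValue:
    """Apply fn to the value; tags are kept and the agent is added to lineage."""
    return replace(item, value=fn(item.value), lineage=item.lineage + (agent,))


email = TaggedValue(" Ana@Example.com ", sensitivity="pii", source="crm")
cleaned = transform(email, "normalizer-agent", lambda v: v.strip().lower())
masked = transform(cleaned, "masking-agent", lambda v: v[0] + "***@" + v.split("@")[1])
```

After two hops, `masked.lineage` answers "which agents touched it?" and `masked.source` answers "where did it originate?" without anyone digging through logs.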

Zero-trust architectures are replacing perimeter-based security. Never trust, always verify. Even for AI agents.

Every data access request gets evaluated in real time. Companies like Snowflake and Databricks build these capabilities directly into their platforms. API gateways enforce least-privilege access at runtime: agents get only the data they need.
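A least-privilege check at request time can be as simple as comparing the fields an agent asks for against the fields it is allowed to see. The sketch below assumes a static, hand-written `POLICY` table with hypothetical agent names. A real gateway would evaluate richer context, but the deny-by-default shape is the same.

```python
# Hypothetical policy: the fields each agent is allowed to read.
POLICY = {
    "finance-agent": {"order_total", "payment_method"},
    "logistics-agent": {"shipping_address"},
}


def fetch_for_agent(agent, record, requested):
    """Return only the requested fields, and only if every one is permitted."""
    allowed = POLICY.get(agent, set())  # unknown agents get nothing
    denied = set(requested) - allowed
    if denied:
        raise PermissionError(f"{agent} may not read: {sorted(denied)}")
    return {name: record[name] for name in requested}


order = {
    "order_total": 59.0,
    "payment_method": "card",
    "shipping_address": "1 Main St",
}

label_data = fetch_for_agent("logistics-agent", order, ["shipping_address"])

try:
    fetch_for_agent("logistics-agent", order, ["payment_method"])
    blocked = False
except PermissionError:
    blocked = True
```

The check runs on every call, so an agent that was fine a minute ago gets no standing access: verify each request, every time.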

Tools like Collibra and Alation now audit AI agent decisions and map data flows across multi-agent systems, generating compliance reports aligned with NIST AI RMF.

Companies implementing dynamic governance see a 60% reduction in data security incidents and 80% faster compliance audits, according to Forrester.

Data Quality: The Make-or-Break Factor

McKinsey estimates that poor data quality erodes $2.6-4.4 trillion in AI value globally. Trillion. With a T.

Human analysts can spot obviously wrong data. They apply common sense.

AI agents? They trust the data implicitly. Feed them garbage, and they'll confidently make terrible decisions at scale.

Agentic systems demand 99.9% clean data. Because errors compound across multi-agent workflows. One agent's mistake becomes the next agent's input. Before you know it, your entire AI ecosystem is operating on corrupted assumptions.
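A quick back-of-the-envelope calculation shows why errors compound. It rests on a simplifying assumption that is ours, not the source's: each agent in a chain is independently correct with the same probability, so the chance the whole chain is correct is that probability raised to the number of steps.

```python
def chain_accuracy(per_step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain is correct.

    Assumes each step succeeds independently with the same probability,
    which is a simplification of real multi-agent workflows.
    """
    return per_step_accuracy ** steps


five_steps_at_99 = chain_accuracy(0.99, 5)     # roughly 0.951
five_steps_at_999 = chain_accuracy(0.999, 5)   # roughly 0.995
```

Under that assumption, a five-agent workflow running on 99% reliable inputs gets things wrong about one time in twenty, while 99.9% inputs bring that down to about one in two hundred.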

The solution? Automated validation agents that continuously monitor data quality. They scan for anomalies, outliers, inconsistencies, and format errors in real-time, before bad data propagates.

ML-based profiling learns what "good" data looks like for your business. When something deviates from expected patterns, it flags the deviation immediately.
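As a stand-in for ML-based profiling, here is the simplest possible version of the idea: learn a baseline from historical values, then flag anything too many standard deviations away. The z-score rule and the threshold of 3 are assumptions chosen for illustration. Production profiling would use richer models.

```python
from statistics import mean, stdev


def build_profile(history):
    """Learn what 'normal' looks like from past values."""
    return mean(history), stdev(history)


def is_anomalous(value, profile, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mu, sigma = profile
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical daily order counts used as the baseline.
profile = build_profile([100, 102, 98, 101, 99])

spike_flagged = is_anomalous(150, profile)   # far outside the baseline
normal_flagged = is_anomalous(101, profile)  # within normal variation
```

A validation agent would run a check like this on each incoming value and hold back anything flagged before downstream agents act on it.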

Morgan Stanley uses AI-driven data quality agents to validate market data before their trading algorithms act on it. A single bad data point could trigger millions in incorrect trades.

Companies are also deploying bias-detection agents that scan training data and live data streams for statistical disparities. They catch issues like gender imbalance or racial bias before agents learn from them.
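One simple form of disparity check is representation: what share of the records belongs to each group, and does any group fall below a floor? The sketch below assumes a hypothetical `min_share` threshold of 30%. It measures imbalance in the data only, which is one narrow signal among many that a real bias audit would examine.

```python
from collections import Counter


def group_shares(records, attribute):
    """Fraction of records belonging to each value of `attribute`."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(records, attribute, min_share=0.3):
    """Groups whose share of the data falls below `min_share`."""
    shares = group_shares(records, attribute)
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical training sample: 2 records for one group, 8 for another.
sample = [{"gender": "f"}] * 2 + [{"gender": "m"}] * 8

shares = group_shares(sample, "gender")
flagged_groups = underrepresented(sample, "gender")
```

Here the sample is 20% one group and 80% the other, so the smaller group is flagged before any agent trains on the data.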

Gartner research shows that businesses improving data quality see 20-25% better AI performance and 30% faster time-to-value.

Your Next Move: Don't Wait for Perfect

Rethinking your entire data strategy feels overwhelming. Legacy systems. Technical debt. Budget constraints.

But here's the reality: Agentic AI isn't waiting for you to be ready.

Start small but start now: Audit your current state. Build one unified profile for your most critical data domain. Implement a basic orchestration layer for one high-value workflow. Add quality controls. Measure everything. Then scale.

Agentic AI is forcing this rethink whether you like it or not. The only question is whether you'll lead the transformation or scramble to catch up.

Your data strategy is broken. The fix is clear. The opportunity is massive. What are you waiting for?