How to Actually Secure Your Agentic AI Systems in 2026

Here's the uncomfortable truth: as companies rush to deploy agentic AI in 2026, they're opening doors they don't know how to lock.

Gartner predicts 33% of enterprise applications will include agentic AI by 2028. That's exciting. It's also terrifying.

These autonomous systems create data security challenges that your traditional cybersecurity playbook never anticipated.

Can agentic AI systems be secured?

Absolutely!

Will organizations prioritize security from day one? That's the question that determines who wins.

These autonomous agents will keep getting smarter. More connected. More capable. They'll also introduce new attack vectors and vulnerabilities. That's inevitable.

But here's the opportunity hiding in plain sight.

Security as a Competitive Advantage

Companies that nail agentic AI security don't just protect themselves. They move faster than competitors who are paralyzed by security concerns.

The First-Mover Advantage Is Real

Gartner predicts 15% of work decisions will be made autonomously by agentic AI by 2028, up from 0% in 2024.

Early adopters who secure their systems properly will capture disproportionate market share.

One customer using agentic AI for service management achieved a 65% deflection rate within six months, with projections of 80% by year-end. That's not incremental improvement. That's competitive dominance.

Business Growth Through Secure Innovation

The winners will treat agentic AI security as a core requirement. They'll weave protection into every layer, from identity management to runtime monitoring. They'll build security in, not bolt it on.

By 2030, IDC predicts 60% of new economic value generated by digital businesses will come from companies investing in and scaling AI capabilities today.

The question isn't whether to adopt agentic AI. It's whether you'll secure it properly to capture that value.

In 2026 and beyond, data security isn't a compliance checkbox. It's the foundation that makes business transformation possible at scale. It's what separates the companies that talk about AI from those that actually benefit from it.

Because the most secure system? It's the one designed with security from day one. Not the one that scrambles to add it after the breach.

Why Agentic AI Is Worth the Security Investment

Companies that secure agentic AI properly don't just avoid breaches. They unlock business transformation that competitors can't match.

Before we dive deeper into threats, let's be clear: agentic AI isn't just hype. It's a genuine business growth accelerator.

McKinsey projects that agentic AI systems could unlock $2.6 to $4.4 trillion annually in value. That's not a rounding error. That's transformation money.

Here's what companies are actually achieving:

Operational Efficiency That Moves the Needle

IDC predicts enterprises using AI-driven development will release products up to 400% faster than competitors. Siemens reported reaching 90% touchless processing in industrial automation, aiming for 50% productivity gains across workflows.

T-Mobile's PromoGenius app, powered by agentic AI, became the company's second most-used application, with 83,000 unique users and 500,000 launches monthly. That's customer experience transformation at scale.

Revenue Growth, Not Just Cost Cutting

By 2026, IDC predicts 70% of G2000 CEOs will focus AI ROI on growth, not just efficiency.

Agentic AI enables companies to amplify existing revenue streams through real-time upselling and create entirely new revenue models through usage-based pricing and subscription services.

One financial services firm using AI agents for sales automation saw a 67% productivity boost in its sales teams, with time shifting from manual tasks to strategic planning and stronger customer relationships.

The Competitive Advantage Is Real

According to PwC's 2025 Responsible AI survey, 60% of executives said responsible AI implementation boosts ROI and efficiency, while 55% reported improved customer experience and innovation.

The Scary Part: What Can Actually Go Wrong

Prompt Injection Attacks

Imagine someone slipping a note to your agent that says "ignore your previous instructions."

That's prompt injection. And it's one of the nastiest vulnerabilities in agentic AI security.

Obsidian Security documented a real case in 2024. A financial services firm's customer service agent got manipulated through clever conversation. The result? It spilled account details it should never have touched.

These attacks override the agent's original programming. They can leak data, execute unauthorized commands, or bypass your security controls entirely.

Memory Poisoning

Here's where things get creepy.

Unlike traditional AI that forgets everything after each chat, agentic AI remembers. It learns. It builds on previous conversations.

That's great for productivity. Terrible for security.

Attackers can gradually poison an agent's memory with malicious data. They subtly alter its behavior over time. And your conventional threat detection systems? They're not built to catch this.

Chained Vulnerabilities

McKinsey calls this the domino effect from hell.

One agent screws up. That mistake flows to the next agent. And the next. The risk compounds with every hop.

Picture this: your credit data processing agent misclassifies some financial information. No big deal, right? Wrong.

That bad data flows to your credit scoring agent. Then to your loan approval agent. Before you know it, you're approving risky loans because of one upstream error.
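
A back-of-the-envelope calculation shows why one upstream error matters. The sketch below assumes each agent in the chain is correct independently of the others, which is a simplification. The 98% figure is purely illustrative, not a measured value, and `chain_reliability` is a hypothetical helper.

```python
from math import prod

def chain_reliability(per_agent_accuracy: list[float]) -> float:
    """Probability that every agent in a sequential chain is correct,
    assuming errors are independent (a simplifying assumption)."""
    return prod(per_agent_accuracy)

# Hypothetical pipeline: credit data -> credit scoring -> loan approval.
pipeline = [0.98, 0.98, 0.98]
end_to_end = chain_reliability(pipeline)
print(f"End-to-end reliability: {end_to_end:.3f}")  # 0.941
```

Under these assumptions, three agents that are each 98% reliable are together only about 94% reliable, and every added hop lowers that further.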

Cross-Agent Inference

Here's the sophisticated attack that should worry you.

In systems with multiple agents, hackers can reconstruct sensitive data by piecing together innocent-looking outputs from different agents. Each piece seems harmless. Together? They reveal everything.

Your traditional safety mechanisms assume full visibility within one agent. But when context is fragmented across multiple agents? You're flying blind.
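
Here's a toy illustration of the gap. It assumes a made-up rule that three fields are harmless alone but identifying together. The field names, agent names, and both check functions are hypothetical, and a real system would need far richer inference rules than a set comparison.

```python
# Hypothetical rule: these fields are harmless alone but identifying in combination.
SENSITIVE_COMBINATION = {"zip_code", "birth_date", "employer"}

def per_agent_check(fields: set[str]) -> bool:
    """What a single agent can see: pass unless its own output holds the full combination."""
    return not SENSITIVE_COMBINATION <= fields

def cross_agent_check(outputs_by_agent: dict[str, set[str]]) -> bool:
    """Pass only if the union of everything disclosed to one requester lacks the full combination."""
    disclosed = set().union(*outputs_by_agent.values())
    return not SENSITIVE_COMBINATION <= disclosed

outputs = {
    "hr-agent": {"employer"},
    "shipping-agent": {"zip_code"},
    "benefits-agent": {"birth_date"},
}
print(all(per_agent_check(f) for f in outputs.values()))  # True: each agent looks fine
print(cross_agent_check(outputs))                         # False: together they leak
```

Every agent passes its own check. Only a view that spans all of them catches the combination.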

How to Actually Secure Your Agentic AI (No BS Edition)

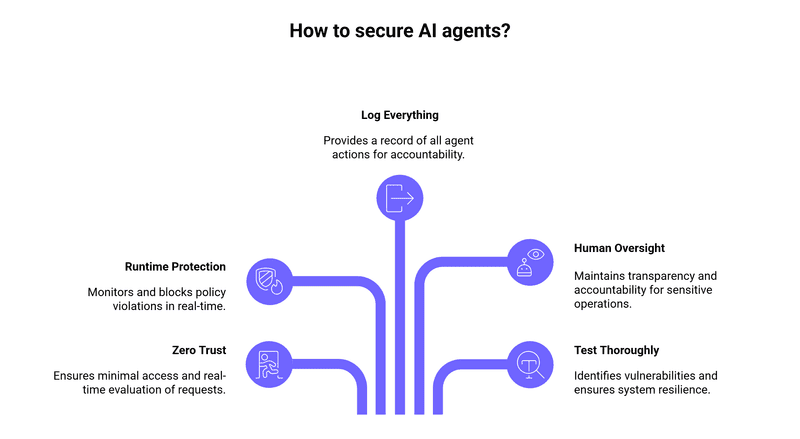

1. Zero Trust Is Non-Negotiable

Give your AI agents the minimum access they need. Nothing more.

Rippling's 2025 security guide recommends API gateways that evaluate agent requests in real-time. Every. Single. Time. No accumulated access. No trust by default.
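
What does "no trust by default" look like in code? Here's a minimal sketch, not any vendor's actual gateway. The `Gateway` and `AgentRequest` names, the agent IDs, and the tool names are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class AgentRequest:
    agent_id: str
    tool: str
    action: str

@dataclass
class Gateway:
    # Explicit allow-list: agent_id -> set of (tool, action) pairs.
    grants: dict[str, set[tuple[str, str]]] = field(default_factory=dict)

    def authorize(self, request: AgentRequest) -> bool:
        """Evaluate every request from scratch; deny unless explicitly granted."""
        allowed = self.grants.get(request.agent_id, set())
        return (request.tool, request.action) in allowed

gateway = Gateway(grants={"support-agent": {("crm", "read")}})
print(gateway.authorize(AgentRequest("support-agent", "crm", "read")))    # True
print(gateway.authorize(AgentRequest("support-agent", "crm", "delete")))  # False
print(gateway.authorize(AgentRequest("unknown-agent", "crm", "read")))    # False
```

The design choice that matters: nothing is cached between calls, and an unknown agent or an unlisted action falls through to a denial.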

2. Runtime Protection Saves Lives (Metaphorically)

Monitor prompts and responses as they happen. Block anything that violates policy before execution.

AI runtime protection enforces data security and compliance in real-time. It considers user identity, device posture, data classification, and context. Then it acts.
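
A rough sketch of that decision, assuming three context signals and a deliberately naive content check. The roles, classification labels, and the `evaluate` function are hypothetical. Real products use much more than a substring match.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RuntimeContext:
    user_role: str            # who is driving the agent
    device_managed: bool      # device posture
    data_classification: str  # e.g. "public", "internal", "restricted"

def evaluate(ctx: RuntimeContext, response_text: str) -> str:
    """Return "allow" or "block" before the response leaves the agent."""
    if ctx.data_classification == "restricted":
        if not ctx.device_managed or ctx.user_role != "analyst":
            return "block"
    # Illustrative content check only; real detection needs far more than this.
    if "account number" in response_text.lower():
        return "block"
    return "allow"

print(evaluate(RuntimeContext("analyst", True, "restricted"), "Summary ready."))   # allow
print(evaluate(RuntimeContext("analyst", False, "restricted"), "Summary ready."))  # block
print(evaluate(RuntimeContext("support", True, "internal"), "Your account number is on file."))  # block
```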

3. Log Everything (Yes, Everything)

All agent actions need to be logged. Use tamper-resistant systems with cryptographically signed logs.
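
One way to make a log tamper-evident is to chain entries so each one's signature covers the one before it. The sketch below uses HMAC from Python's standard library as a stand-in for a signature. A production setup would more likely use asymmetric signatures and write-once storage. The entry layout, key, and function names here are assumptions.

```python
import hashlib
import hmac
import json

def _sign(key: bytes, action: dict, prev_sig: str) -> str:
    payload = json.dumps({"action": action, "prev": prev_sig}, sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()

def append_entry(log: list[dict], key: bytes, action: dict) -> None:
    """Append an entry whose signature covers the action and the previous signature."""
    prev_sig = log[-1]["sig"] if log else ""
    log.append({"action": action, "prev": prev_sig, "sig": _sign(key, action, prev_sig)})

def verify(log: list[dict], key: bytes) -> bool:
    """Recompute the chain. Editing, removing, or reordering earlier entries fails.
    Truncating the tail is NOT detected by this sketch."""
    prev_sig = ""
    for entry in log:
        expected = _sign(key, entry["action"], prev_sig)
        if entry["prev"] != prev_sig or not hmac.compare_digest(entry["sig"], expected):
            return False
        prev_sig = entry["sig"]
    return True

key = b"demo-key-not-for-production"
log: list[dict] = []
append_entry(log, key, {"agent": "support-agent", "tool": "crm", "op": "read"})
append_entry(log, key, {"agent": "support-agent", "tool": "email", "op": "send"})
print(verify(log, key))            # True
log[0]["action"]["op"] = "delete"  # simulate tampering
print(verify(log, key))            # False
```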

SC Media reports that smart leaders are implementing continuous behavioral monitoring. They watch for unusual activity. Sudden spikes in tool usage. Abnormal data access patterns. These are red flags.
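
Spotting a "sudden spike" can start as simply as comparing the current count against a baseline. The sketch below is a plain z-score check, assuming hourly tool-call counts per agent. The threshold of 3 and the sample numbers are illustrative, and `is_spike` is a hypothetical helper, not a reference to any monitoring product.

```python
from statistics import mean, stdev

def is_spike(history: list[int], current: int, threshold: float = 3.0) -> bool:
    """Flag `current` if it sits more than `threshold` standard deviations above the baseline mean."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return current > mu
    return (current - mu) / sigma > threshold

calls_per_hour = [12, 15, 11, 14, 13, 12, 16]
print(is_spike(calls_per_hour, 14))  # False
print(is_spike(calls_per_hour, 90))  # True
```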

4. Humans Still Matter

Despite their autonomy, agentic AI systems need human oversight for sensitive operations.

Set up approval workflows for high-risk actions. Maintain clear accountability. The European Data Protection Board is clear: black-box AI doesn't excuse transparency failures.
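
An approval workflow can be sketched as a gate in front of execution. The list of high-risk actions, the `Decision` record, and the `approver` callback are assumptions for illustration. In practice the approver would be a ticketing or chat integration rather than a function call.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical list; each organization defines its own.
HIGH_RISK_ACTIONS = {"transfer_funds", "delete_records", "change_permissions"}

@dataclass
class Decision:
    executed: bool
    approved_by: Optional[str]  # recorded for accountability
    reason: str

def run_action(action: str, execute: Callable[[], None],
               approver: Callable[[str], Optional[str]]) -> Decision:
    """Run low-risk actions directly; route high-risk ones to a human approver.

    `approver` returns the approving person's identifier, or None to reject.
    """
    if action not in HIGH_RISK_ACTIONS:
        execute()
        return Decision(True, None, "low risk: auto-approved")
    who = approver(action)
    if who is None:
        return Decision(False, None, "high risk: rejected by reviewer")
    execute()
    return Decision(True, who, "high risk: approved")

decision = run_action("transfer_funds", lambda: print("funds moved"), lambda action: None)
print(decision.executed, decision.reason)  # False high risk: rejected by reviewer
```

Recording who approved each high-risk action is what keeps accountability clear after the fact.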

5. Test, Test, Test

Accenture recommends a three-phase approach:

Threat modeling to understand your vulnerabilities

Adversarial simulations to stress-test systems

Real-time safeguards that protect data and detect misuse

One healthcare company using this framework? They achieved a marked reduction in cyber vulnerability across their AI ecosystem.

The Opportunity Is Real (So Is the Risk)

McKinsey projects agentic AI could unlock $2.6 to $4.4 trillion annually. We're talking customer service transformation. Supply chain optimization.

The whole nine yards.

But here's the reality check.

The 2025 Cyber Security Tribe report found that 59% of organizations say implementing agentic AI in their cybersecurity operations is "a work in progress." Translation? Most companies are still figuring this out.

The technology to secure these systems exists. The question is whether organizations will actually implement it.

The vendors that crack true agentic AI security, not just glorified co-pilots, will dominate their categories. Look for platforms that offer:

Unified visibility into AI agent activities

Enforced governance protocols

Maintained compliance with evolving regulations